My final year at MIT was about getting things live. After years of building with co-founders, I launched my first solo project from a dark room in Cape Town, connected to my hotspot during load shedding. The spring semester was packed with classes and research, but by fall I shifted focus: seeking out courses that connected me with potential co-founders and exposed me to ambitious problems in unfamiliar industries.

The year was a series of experiments in turning ideas into reality, each building on lessons from the last. Here's what happened.

Building in Real-Time

The first project started with a question: what if we could generate entire stores in response to real-time search trends? While juggling psets and research in the spring semester, I began building a system that could track Google searches and, in response, spin up personalized stores in real time. Could we use AI to design new products when nothing matched what people wanted?

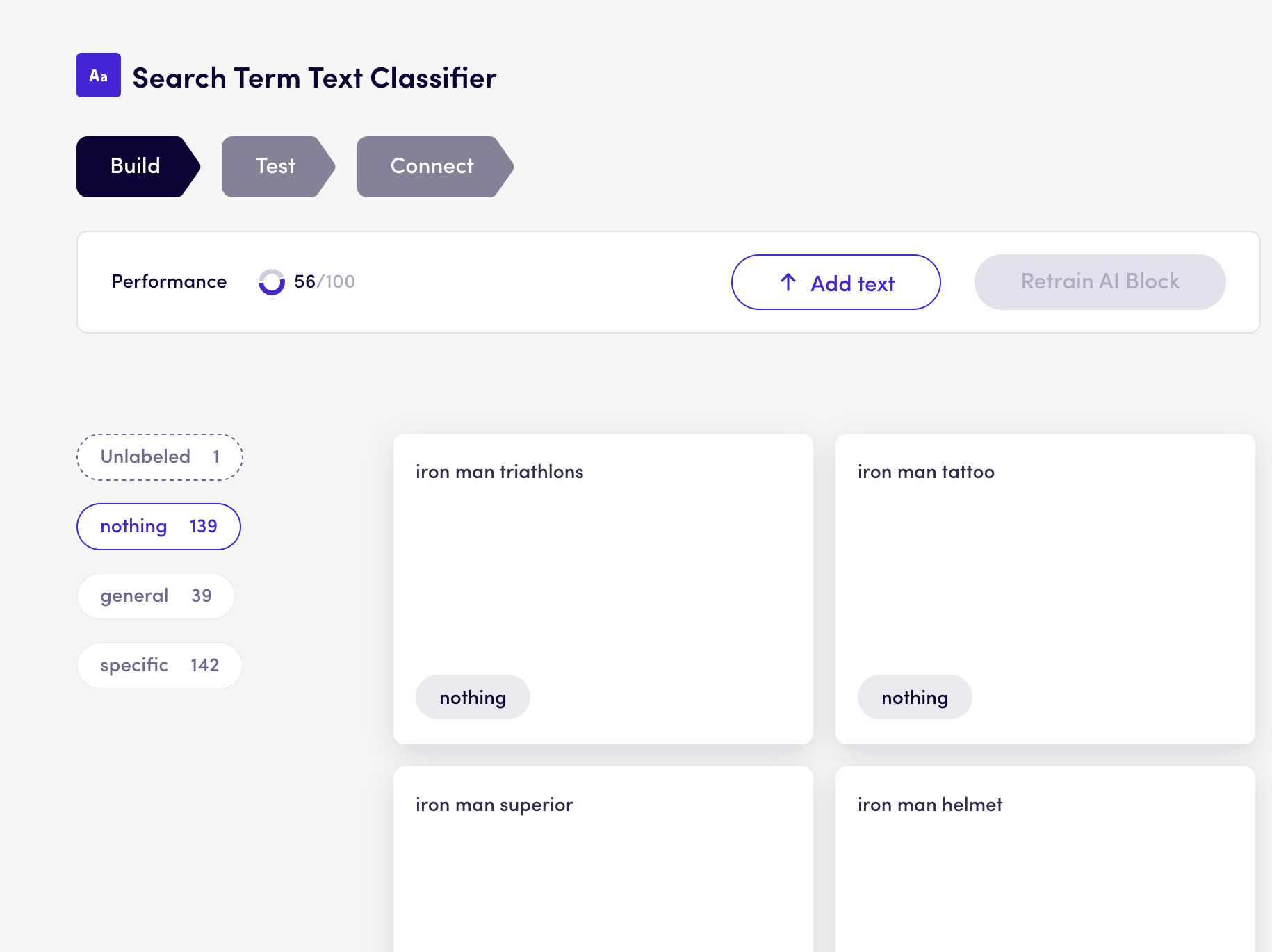

A classmate and I started small, testing with Shopify stores to validate the concept. Kids' clothes emerged as our clear winner, so we built out a full pipeline: SEMRush for tracking search trends, Levity to classify product opportunities, and a custom system using GPT to generate taglines, Stable Diffusion for graphics, and Python scripts to assemble the designs. We automated everything through n8n workflows.

The technical stack evolved as we experimented. For image generation, we used Replicate's fine-tuning features to train Stable Diffusion on popular illustration styles from successful products. (Pro tip: use Glitch.com for hosting training data - just drag files into storage for an instant public link.)

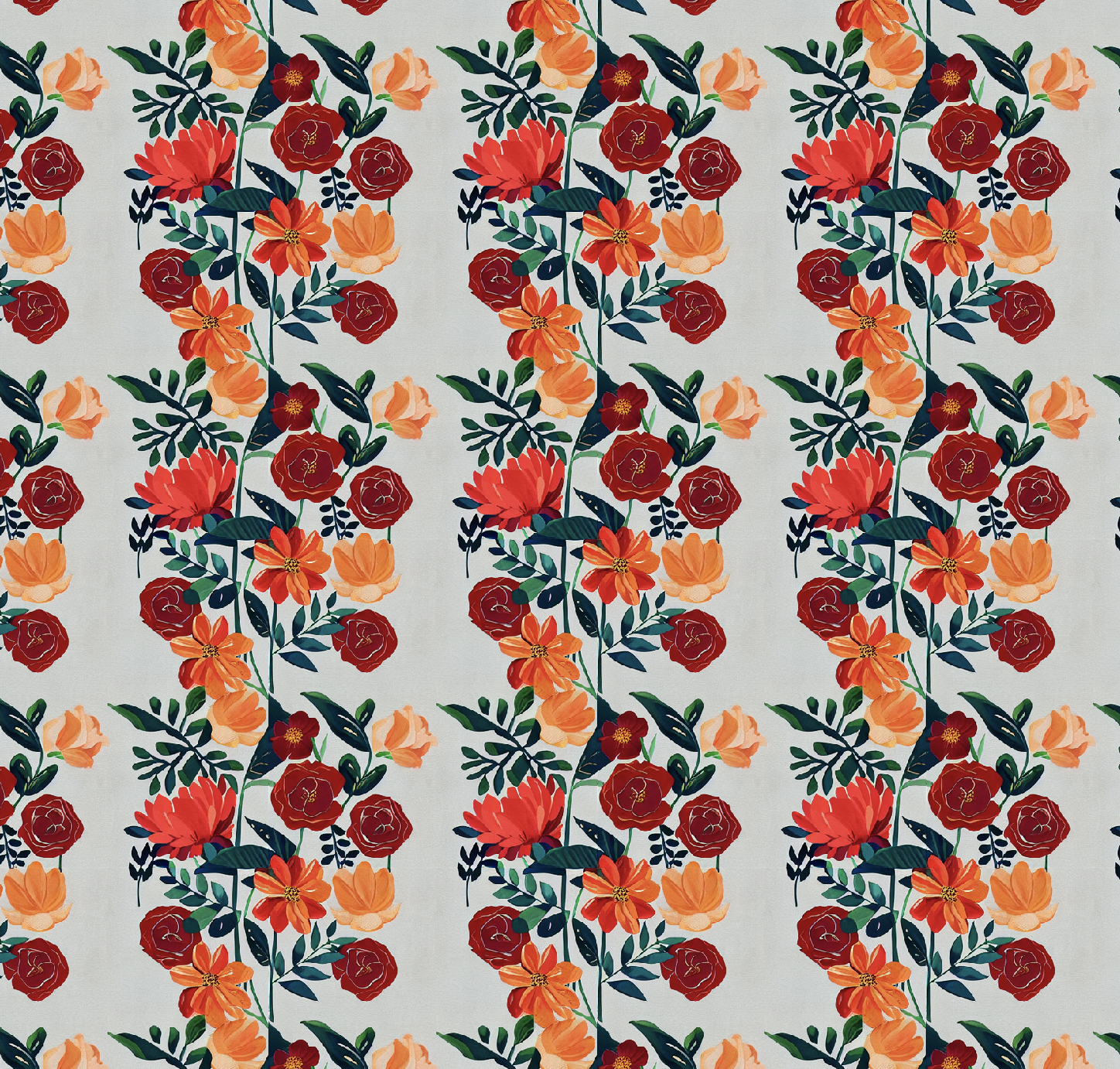

We developed a pattern generation system that could turn any image into seamless repeating designs, perfect for fabric prints.
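One common way to make any image tile seamlessly is mirror tiling: reflect it horizontally and vertically so that every edge meets an identical edge. The sketch below shows that technique on a grid of pixel values. It is an illustrative assumption, not necessarily how our system worked (offsetting the image by half its size and inpainting the seams is another option), and `make_seamless` and `repeat_pattern` are hypothetical names.

```python
from typing import List

# A grid of pixel values; ints here, but RGB tuples work the same way
Grid = List[List[int]]


def make_seamless(tile: Grid) -> Grid:
    """Mirror a tile horizontally and vertically so opposite edges match."""
    # Each row followed by its reverse: the left and right edges become identical
    wide = [row + row[::-1] for row in tile]
    # The rows followed by their reverse: the top and bottom edges become identical
    return wide + wide[::-1]


def repeat_pattern(tile: Grid, across: int, down: int) -> Grid:
    """Repeat a tile `across` times horizontally and `down` times vertically."""
    # Build fresh row lists so the result has no shared (aliased) rows
    return [row * across for _ in range(down) for row in tile]


tile = make_seamless([[1, 2], [3, 4]])
print(tile)  # [[1, 2, 2, 1], [3, 4, 4, 3], [3, 4, 4, 3], [1, 2, 2, 1]]
fabric = repeat_pattern(tile, across=2, down=3)
```

Because the mirrored tile's left column equals its right column and its top row equals its bottom row, neighboring copies always meet without a visible seam. The trade-off is a kaleidoscope-like symmetry that not every design wants.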

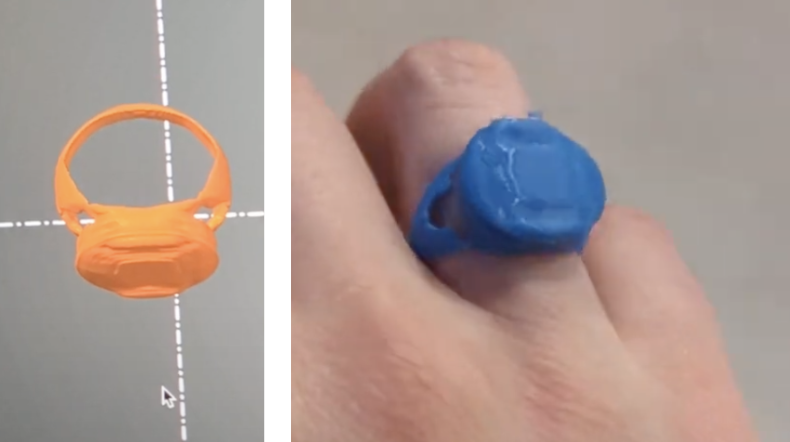

We didn't stop at 2D designs. Using recent research and tools like Shap-E and Text2Mesh, we started experimenting with generating entire 3D products. We could generate rings, vases, and other objects with AI-designed patterns and textures, then 3D-print them.

The project taught me important lessons about technical choices. My teammate Zack had a great eye for scalable tools and architecture. But our commitment to open-source solutions sometimes meant spending days on features that could have taken hours with services like AWS. It was a constant balance between building it right and building it fast.

Pivoting to Search

As finals approached, the project started losing steam. Zack raised money for his other startup and moved on, leaving me wondering if this was something I was actually interested in working on. The technology worked, but something wasn't clicking. I started thinking about using our tools more directly - what if, instead of generating products, we helped people discover products in a more personalized way?

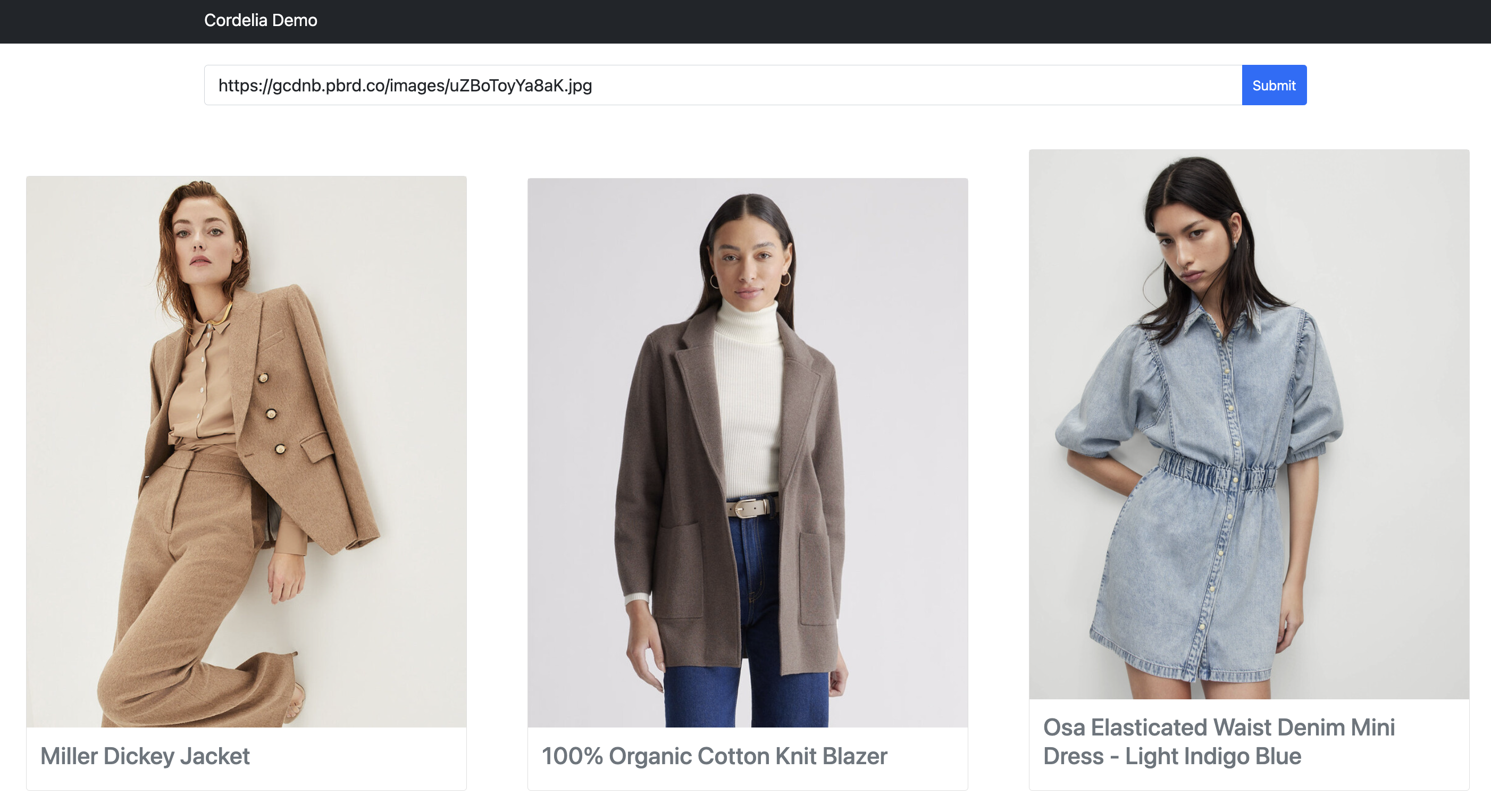

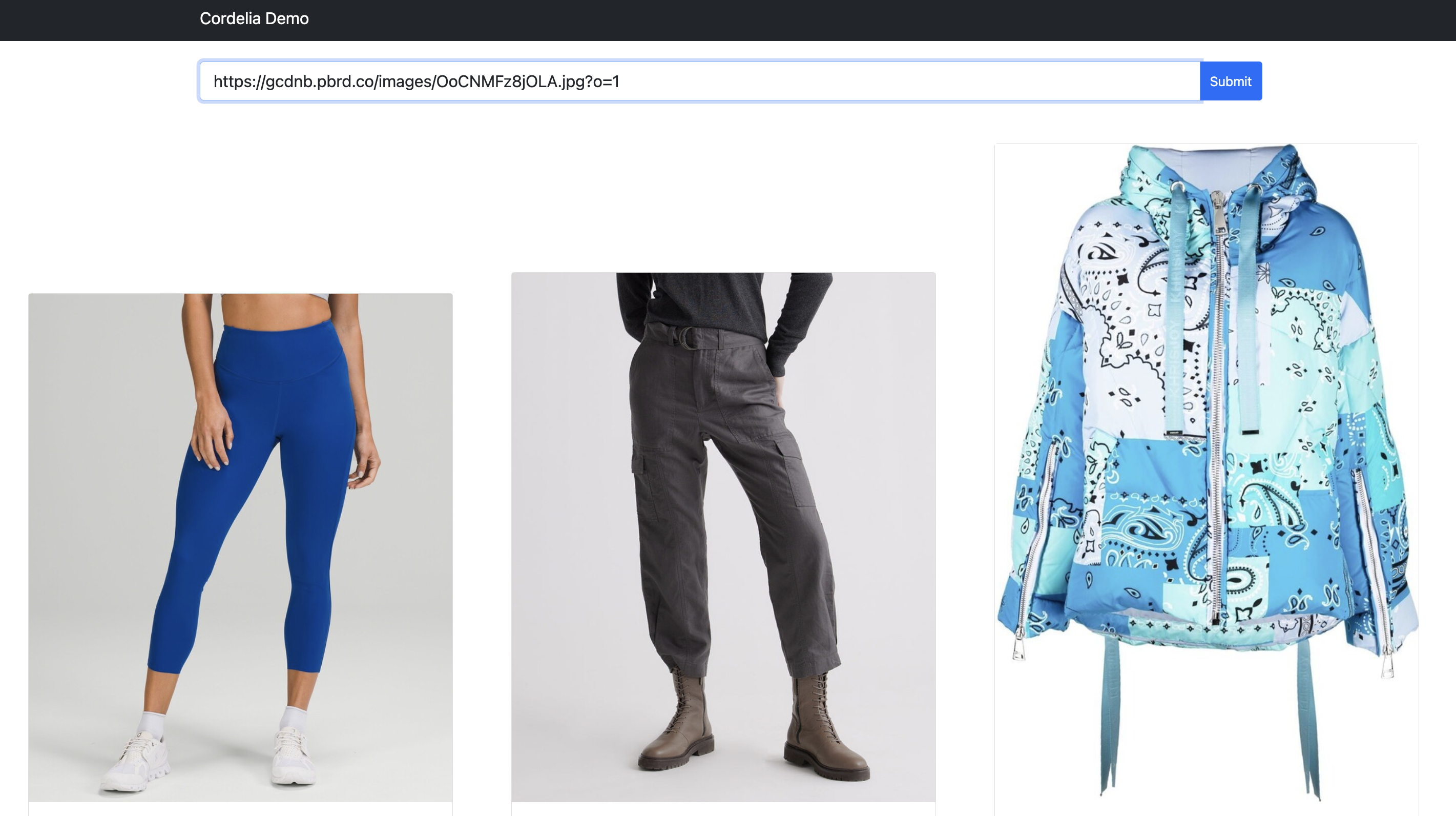

I threw together a quick prototype: a chatbot built with GPT-4 (starter code here), embedded in a Webflow site. It would ask users about their style preferences, then use their responses to curate mystery boxes of clothes. The implementation was dead simple - a Colab notebook using CLIP to match their conversation responses with products from our catalog. I launched it as Mystery Stylist, curious to see if anyone would use it.
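Matching a conversation to products comes down to comparing CLIP embeddings. Assuming the user's answers have been embedded as text with the same CLIP model used for the catalog images, a mystery box can be curated by ranking products by cosine similarity. This is a minimal sketch: `curate_box`, the product names, and the toy 2-D vectors are hypothetical, and real CLIP embeddings have hundreds of dimensions.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def curate_box(
    query_embedding: Sequence[float],
    product_ids: List[str],
    product_embeddings: List[Sequence[float]],
    box_size: int = 3,
) -> List[Tuple[str, float]]:
    """Return the `box_size` products most similar to the query, best first."""
    scored = [
        (pid, cosine_similarity(query_embedding, emb))
        for pid, emb in zip(product_ids, product_embeddings)
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:box_size]


# Toy vectors standing in for a text embedding and three product image embeddings
box = curate_box(
    [1.0, 0.0],
    ["denim", "sweater", "jacket"],
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    box_size=2,
)
print([pid for pid, _ in box])  # ['denim', 'jacket']
```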

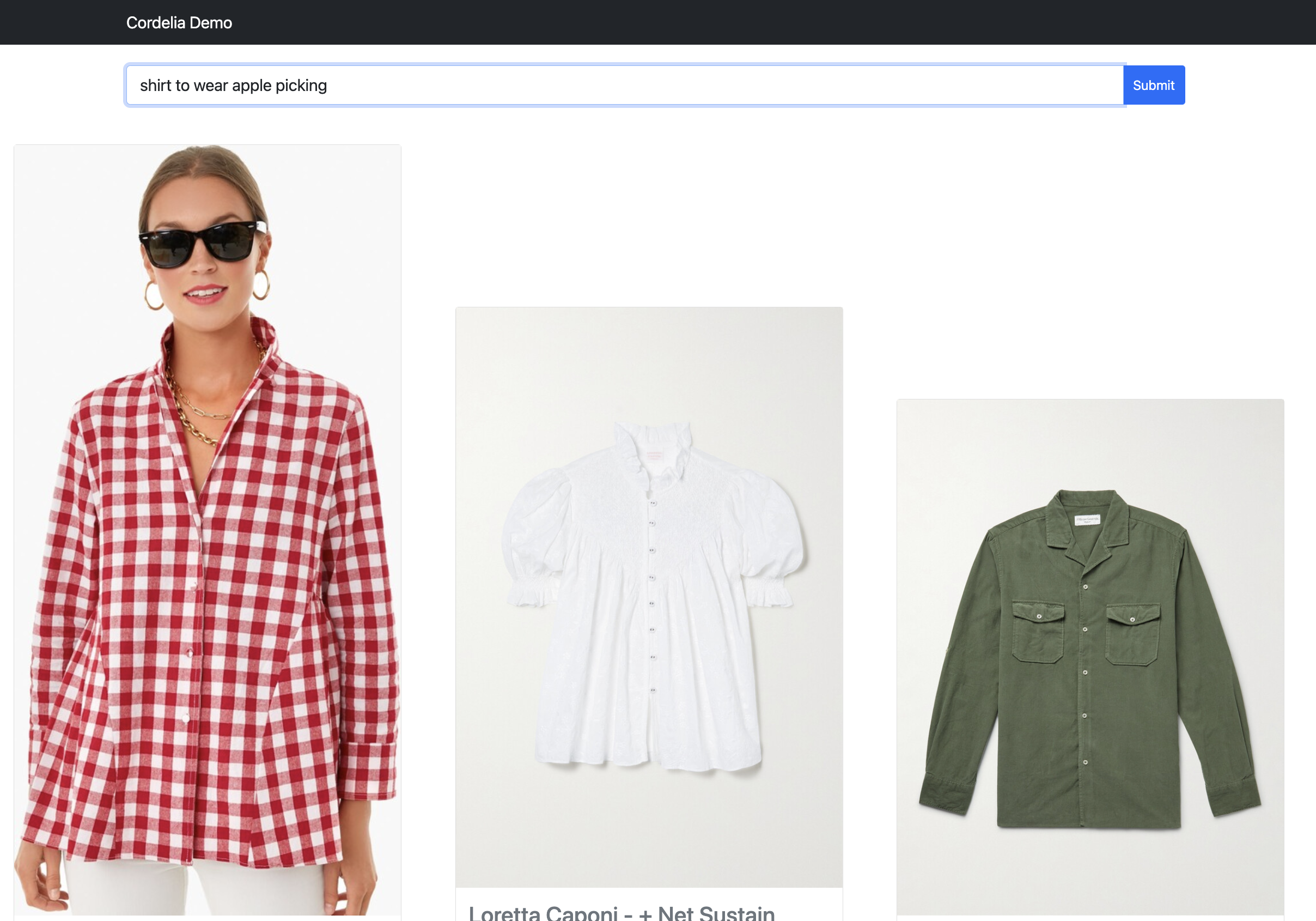

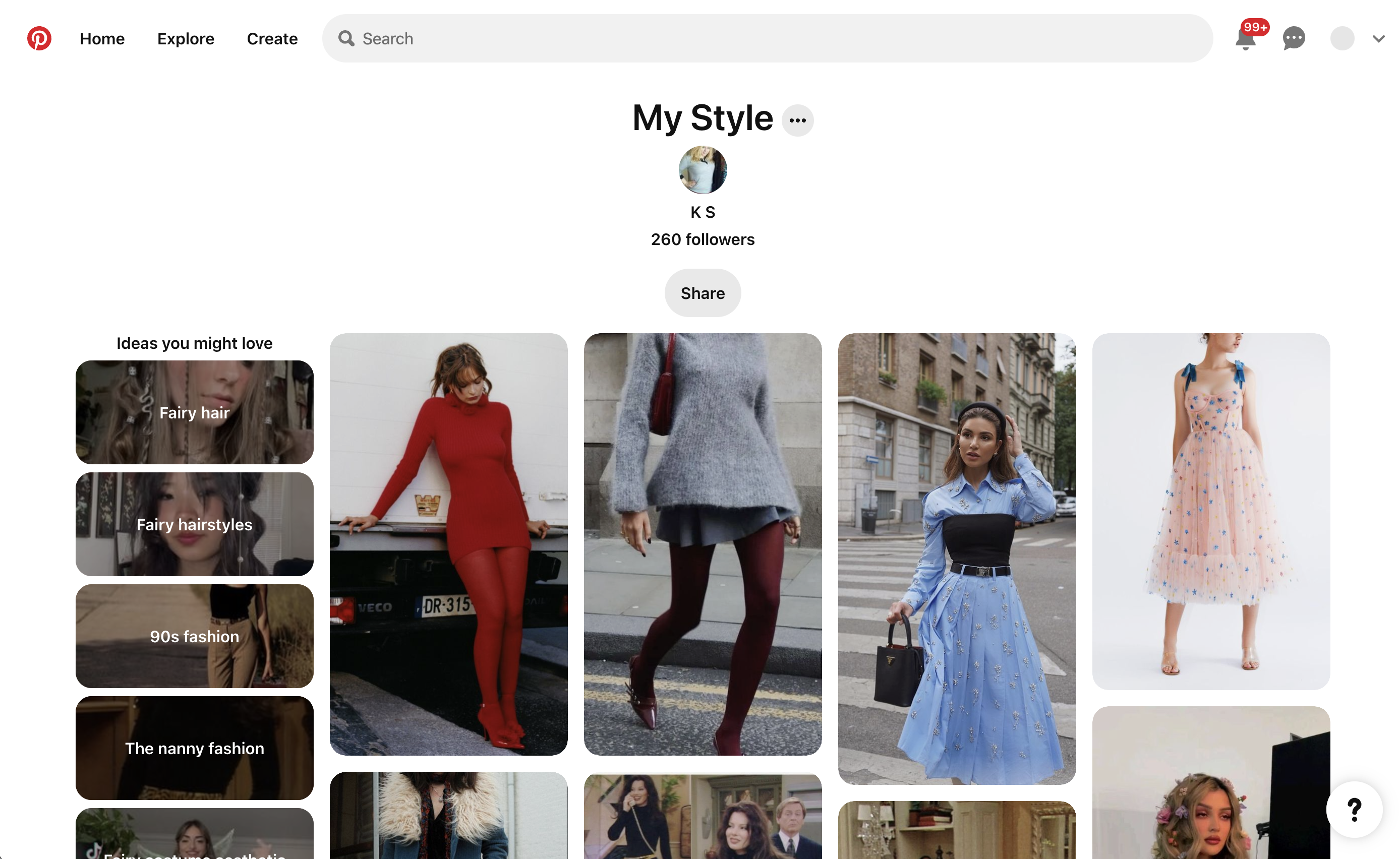

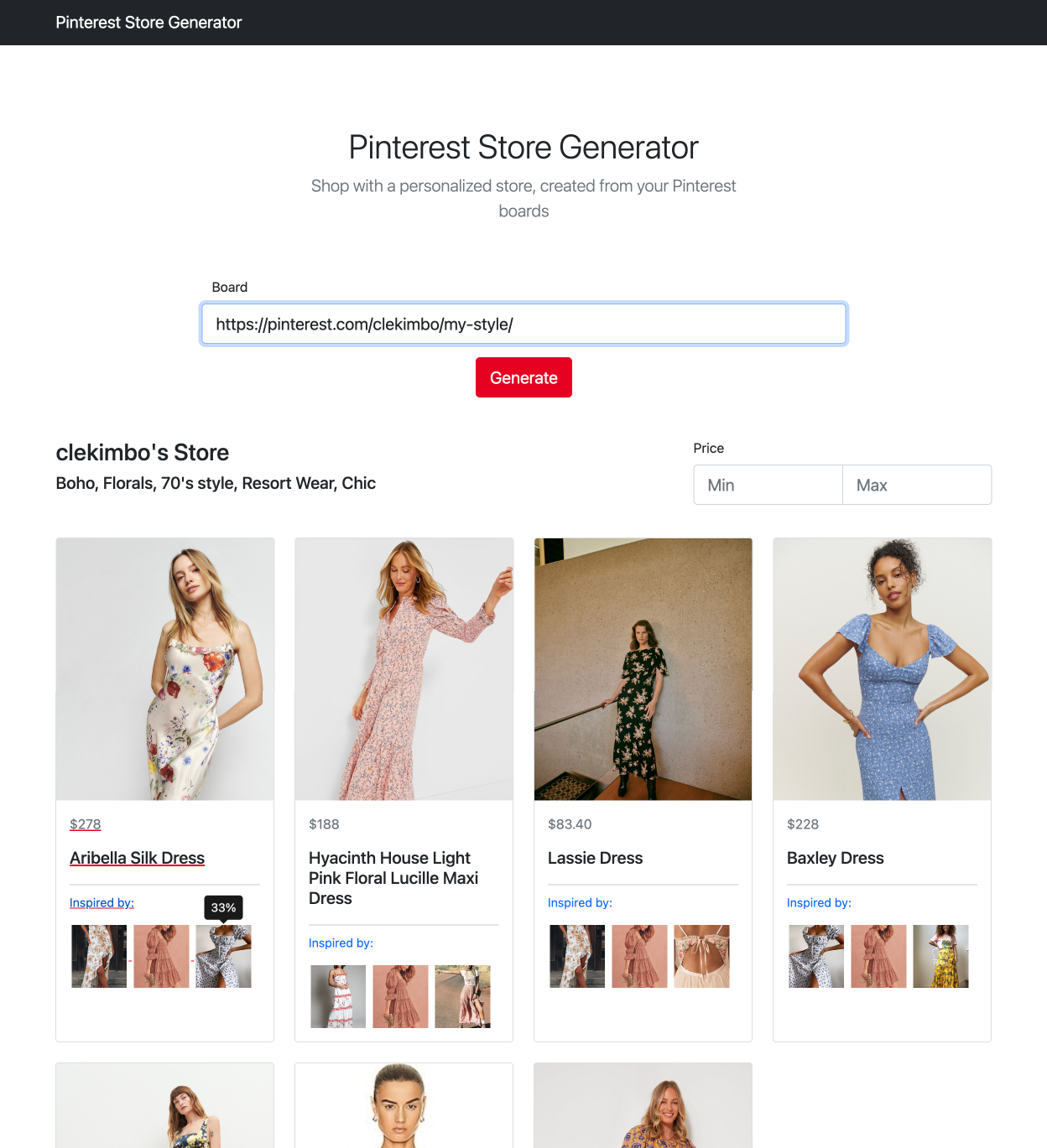

After I posted on Reddit, orders started coming in immediately. The first two days brought in $1,220.76 in sales. But what really caught my attention wasn't the sales - it was how well CLIP was matching products to natural language descriptions. This evolved into two new experiments: Mystery Stylist, where users could search for clothes by describing occasions ("shirt to wear apple picking"), and Pinterest Store, where they could use their Pinterest boards to find similar items.

The technical implementation was straightforward. Collective Voice's API provided the product catalog and handled affiliate payments, while ZenRows helped scrape Pinterest boards. The system would generate CLIP embeddings for both the Pinterest images and our product catalog, then use similarity matching to find recommendations. I added an explainability feature that showed users how each reference image influenced their results.

```python
import time
import random
from typing import Any, Dict, List, Optional, Tuple

import requests
import replicate

# Configuration
COLLECTIVE_VOICE_API_URL = "https://api.collectivevoice.com/api/v2/products"
CLIP_MODEL_ID = "andreasjansson/clip-features"
CLIP_VERSION_ID = "75b33f253f7714a281ad3e9b28f63e3232d583716ef6718f2e46641077ea040a"

USER_AGENTS = [
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_5) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.1.1 Safari/605.1.15',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.97 Safari/537.36',
]


class ProductEmbeddingGenerator:
    def __init__(self, api_key: str, replicate_token: str):
        self.api_key = api_key
        # Pass the token to the client directly instead of via REPLICATE_API_TOKEN
        self.replicate = replicate.Client(api_token=replicate_token)

    def fetch_products(self, offset: int, limit: int = 50) -> List[Dict[str, Any]]:
        """Fetch one page of products from the Collective Voice API."""
        params = {
            'pid': self.api_key,
            'offset': offset,
            'limit': limit,
        }
        response = requests.get(
            COLLECTIVE_VOICE_API_URL,
            params=params,
            headers={'User-Agent': random.choice(USER_AGENTS)},
            timeout=30,
        )
        response.raise_for_status()
        return response.json()["products"]

    def generate_embedding(self, image_url: str) -> List[float]:
        """Generate a CLIP embedding for an image URL."""
        outputs = self.replicate.run(
            f"{CLIP_MODEL_ID}:{CLIP_VERSION_ID}",
            input={"inputs": image_url},
        )
        # The model returns one {"input": ..., "embedding": [...]} record per input
        return outputs[0]["embedding"]

    def process_products(
        self, existing_ids: Optional[List[str]] = None
    ) -> Tuple[List[str], List[float], List[List[float]]]:
        """Collect new in-stock products and generate their embeddings."""
        seen = set(existing_ids or [])
        candidates = []  # (product_id, price, image_url)

        for offset in range(0, 4000, 50):
            if offset % 100 == 0:
                print(f"Processing offset: {offset}")
            try:
                products = self.fetch_products(offset)
            except requests.exceptions.RequestException as e:
                print(f"Error fetching products: {e}")
                continue
            for product in products:
                if not product.get("inStock") or product["id"] in seen:
                    continue
                try:
                    image_url = product["image"]["sizes"]["XLarge"]["url"]
                except KeyError:
                    continue  # Skip products without an XLarge image
                seen.add(product["id"])
                candidates.append((product["id"], product["price"], image_url))
            time.sleep(1)  # Rate limiting

        # Generate embeddings for all collected images
        product_ids, prices, embeddings = [], [], []
        for i, (product_id, price, image_url) in enumerate(candidates):
            if i % 100 == 0:
                print(f"Generating embedding {i}/{len(candidates)}")
            try:
                embedding = self.generate_embedding(image_url)
            except Exception as e:
                print(f"Error generating embedding: {e}")
                continue
            # Append all three together so the lists stay index-aligned
            product_ids.append(product_id)
            prices.append(price)
            embeddings.append(embedding)

        return product_ids, prices, embeddings

    def save_data(self, ids: List[str], prices: List[float], embeddings: List[List[float]]) -> None:
        """Save processed data as a Python module the next run can import."""
        with open('data.py', 'w') as f:
            f.write(f'ids_global = {ids}\n')
            f.write(f'prices_global = {prices}\n')
            f.write(f'embeddings = {embeddings}\n')


def main():
    # Resume from a previous run's data.py if it exists; otherwise start empty
    try:
        from data import ids_global, prices_global, embeddings as existing_embeddings
    except ImportError:
        ids_global, prices_global, existing_embeddings = [], [], []

    generator = ProductEmbeddingGenerator(
        api_key="YOUR_API_KEY",
        replicate_token="YOUR_REPLICATE_TOKEN",
    )
    new_ids, new_prices, new_embeddings = generator.process_products(existing_ids=ids_global)

    # Combine new and existing data
    all_ids = ids_global + new_ids
    all_prices = prices_global + new_prices
    all_embeddings = existing_embeddings + new_embeddings

    print(f"Total products processed: {len(all_ids)}")
    generator.save_data(all_ids, all_prices, all_embeddings)


if __name__ == "__main__":
    main()
```

Code to create CLIP embeddings for products from the Collective Voice API. It looks like they may have taken their documentation down, so this might be useful to someone.
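Once products have embeddings, recommending from a Pinterest board and explaining the result can share one calculation. In this sketch, which is an assumption about one way to do it rather than the exact code behind Pinterest Store, each product's score is its mean cosine similarity to the board's images, so the score splits exactly into one contribution per reference image. `recommend_with_explanations`, the product IDs, and the toy vectors are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def recommend_with_explanations(
    reference_embeddings: List[Sequence[float]],
    product_embeddings: Dict[str, Sequence[float]],
    k: int = 3,
) -> List[Tuple[str, float, List[float]]]:
    """Rank products by mean cosine similarity to the reference images.

    Returns (product_id, score, contributions), where contributions[i] is
    reference image i's share of the score and the shares sum to the score.
    """
    refs = [normalize(r) for r in reference_embeddings]
    results = []
    for product_id, embedding in product_embeddings.items():
        p = normalize(embedding)
        # Cosine similarity to each reference, divided by the number of references
        contributions = [sum(a * b for a, b in zip(r, p)) / len(refs) for r in refs]
        results.append((product_id, sum(contributions), contributions))
    results.sort(key=lambda item: item[1], reverse=True)
    return results[:k]


board = [[1.0, 0.0], [0.0, 1.0]]  # two pins
catalog = {"a": [1.0, 0.0], "b": [1.0, 1.0], "c": [-1.0, 0.0]}
for product_id, score, shares in recommend_with_explanations(board, catalog, k=2):
    # "b" ranks first because it sits between both pins; "a" matches only the first
    print(product_id, round(score, 3), [round(s, 3) for s in shares])
```

Averaging normalized vectors keeps the explanation honest: the per-pin shares are not a separate heuristic but the literal terms of the ranking score.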

I presented the MVP at the MIT Imagination in Action Summit and started to reach out to traditional retail stores for potential partnerships. But retail proved to be retail. Returns ate into profits, and I couldn't control quality across suppliers. More fundamentally, I realized I wasn't interested in building yet another fashion marketplace - even an AI-powered one. The technology worked, but was this really the problem I wanted to solve?

Beyond Retail

But what if we could make this more general? A personalization plugin instead of yet another marketplace? In July, I grabbed my laptop from my summer internship, threw it in my backpack, and caught a Thursday-night flight to Paris. The ETHGlobal hackathon seemed like the perfect place to test a bigger idea: taking what we'd learned about personalization and applying it to any website.

I found teammates on Discord who wanted to build something similar, and we spent the weekend building a proof of concept. The idea was simple: take the same technology that powers targeted ads and use it to create genuinely useful, personalized experiences instead. We built a dev tool that could adapt any website's content to a user's preferences without requiring users to give up their personal data.

The project resonated with judges and won $3,900 in prizes. But more importantly, it showed me there was a path beyond retail. The same core technology - understanding user intent and matching it to content - could be applied to much bigger problems.

Barcelona Pit-stop

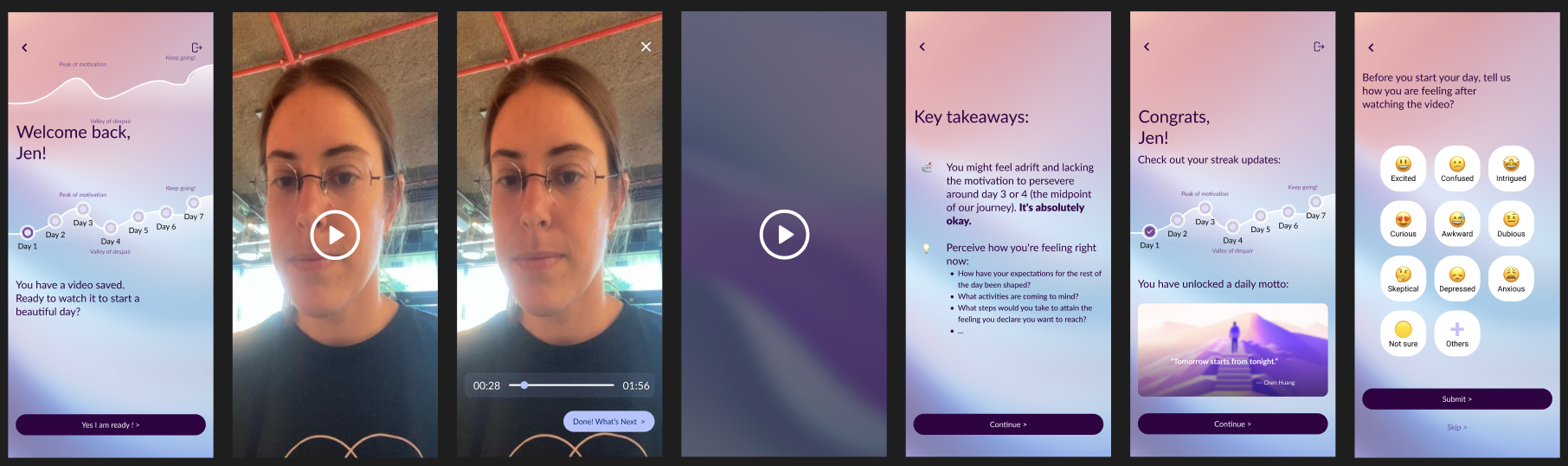

Later in the summer I met up with another classmate, Gabby, in Barcelona to help build a goal-setting app for her thesis research. I had never used Flutter before, but we made it work - three days of coding during the day and swimming at night, ending with a functional MVP. Building something complete in just three days (including what turned out to be Barcelona's hottest day on record) was a much-needed reminder of what's possible with focused time.

This quick win felt especially significant after the drawn-out launches of Mystery Stylist and Pinterest Store. Those projects had taught me about the complexity of keeping services running long-term - dealing with changing APIs, managing costs, maintaining infrastructure. In contrast, the Barcelona project showed the value of a focused sprint: clear scope, quick execution, immediate results.

Tools for Creativity

In October, I joined the MIT AI Conference hackathon, meeting Eric over Discord the first night. Our conversation centered on one question: how can we move beyond manipulating pixels toward contextual design? We started exploring what a contextually aware image editor might look like.

The implementation combined brand-new research with a novel web UI to produce a working prototype. Zero-1-to-3 let us generate different views of objects from single images. Meta's Segment Anything helped us understand and manipulate specific parts of photos. Putting these together, we built something that felt like the future of image editing: software that understood what you were trying to edit, not just which pixels you were selecting.
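Segment Anything returns a boolean mask for each object it segments. An editor can use that mask twice: to crop the object before handing it to a view-synthesis model like Zero-1-to-3, and to paste the edited result back so that only the selected object changes. This sketch shows those two steps on plain nested lists under that assumption; `mask_bbox`, `crop`, and `composite` are hypothetical helpers, and a real implementation would operate on image arrays.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale pixels for simplicity
Mask = List[List[bool]]  # True where the selected object is
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def mask_bbox(mask: Mask) -> Optional[Box]:
    """Return the tightest box around the mask, or None if it is empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None  # nothing selected
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def crop(image: Image, box: Box) -> Image:
    """Cut out the region of the image covered by the box."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def composite(original: Image, edited: Image, mask: Mask) -> Image:
    """Take edited pixels inside the mask and original pixels everywhere else."""
    return [
        [e if m else o for o, e, m in zip(o_row, e_row, m_row)]
        for o_row, e_row, m_row in zip(original, edited, mask)
    ]


mask = [[False, False, False], [False, True, True], [False, False, False]]
photo = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
box = mask_bbox(mask)
print(box, crop(photo, box))  # (1, 1, 2, 3) [[5, 6]]
```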

Mattr

The fall brought one more pivot. With IDM classmates Eden and Katie, I entered the MIT Generative AI Ignite Competition with a different kind of problem: data bias in AI systems. We'd seen how AI tools could fail in subtle but important ways - voice assistants struggling with elderly users, health apps not working for people with different mobility patterns. Our solution used generative AI to audit and augment training data, helping companies build more representative models.
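The audit half of that idea can start as simple counting: measure how each group is represented in a dataset and how many synthetic examples each group would need to match the largest one. This minimal sketch assumes every example carries a single group label, such as an age bracket; the labels, the parity target, and `representation_report` are illustrative assumptions, and a real audit would use richer attributes and targets.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable


def representation_report(labels: Iterable[Hashable]) -> Dict[Hashable, Dict[str, float]]:
    """Each group's share, plus synthetic samples needed to match the largest group."""
    counts = Counter(labels)
    total = sum(counts.values())
    largest = max(counts.values(), default=0)
    return {
        group: {"share": n / total, "to_parity": largest - n}
        for group, n in counts.items()
    }


# Hypothetical voice dataset: 8 clips from adults under 65, 2 from older adults
report = representation_report(["18-64"] * 8 + ["65+"] * 2)
print(report)  # {'18-64': {'share': 0.8, 'to_parity': 0}, '65+': {'share': 0.2, 'to_parity': 6}}
```

The `to_parity` counts are where generative augmentation comes in: they say how many synthetic examples each under-represented group would need before retraining.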

The project resonated beyond our expectations. Selected as finalists from over 100 teams, we won $5,000 and were featured in MIT News. But more importantly, it felt like we'd found a way to use AI to solve structural problems, not just build interesting demos.

What's Next?

I'm writing this in early 2024, looking back at a year of rapid prototypes and technical experiments. The last few months have taken an unexpected turn - a casual meeting with Artem and Serge where we played around with silly AI-generated videos has turned into something much bigger than I could have anticipated.

Video generated with MagicAnimate on Replicate

Special thanks to MIT Sandbox for supporting the chaos.